assignments-hw1answers

1. Bayer Mosaics and Interpolation

Most digital cameras actually have a single sensor, and use filters so each sample (pixel?) sees only one color. The common tiling for the pattern of the color is the Bayer Mosaic, which is described in Section 3.8 of Fundamentals of Computer Graphics.

For this question assume pixel values are between 0 and 1.

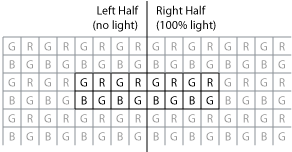

Imagine a Bayer Mosaic sensor where the left half of the image receives no light, and the right half receives enough that it gets 100% (value 1). You can assume the dividing line goes between pixels (not through a column of pixels)

1.A What values will the samples have?

0 0 0 0 1 1 1 1

0 0 0 0 1 1 1 1

1.B. The simplest de-mosaicing method is to interpolate each color independently, to obtain R,G,B for each pixel.

What values (R,G,B) will this provide for this example case? (use linear interpolation. you only need to figure the values for an 8x2 block of pixels, since other values will be similar) What would this look like if you looked closely at the image?

(0,0,0) (0,0,0) (0,0,0) (0, 0.25, 0.5) (0.5, 1, 1) (1,1,1) (1,1,1) (1,1,1)

(0,0,0) (0,0,0) (0,0,0) (0, 0, 0.5) (0.5, 0.75, 1) (1,1,1) (1,1,1) (1,1,1)

Slight blur along the edge (including colors that aren’t actually in the image)

Note: in modern cameras, more sophisticated de-mosaicing algorithms are used.

2. 1D Convolution Practice

Let f, g, and h be the discrete signals:

f = [ 1 2 1 3 1 4 1 5 1 6 2 5 3 4 3 ] g = 1/5 [1 1 1 1 1] h = 1/6 [1 2 3]

For these first 3 parts, compute the ``whole sequence’’ of the convolution:

2.A compute f*g

1/5 * [1 3 4 7 8 11 10 14 12 17 15 19 17 20 17 15 10 7 3]

2.B compute f*h

1/6 * [1 4 8 11 10 15 12 19 14 23 17 27 19 25 20 18 9]

note that if you forget to flip your kernel, you will get something like:

1/6 * [3 8 8 13 10 17 12 21 14 25 19 25 21 23 20 10 3]

For these next 3 parts, assume that f has its ``zero’’ at the firstentry, g and h are ``zero centered’', and compute the convolutionsto provide signals over the same domain as f (your answers shouldbe 15 samples long). For an explanation of the different ways to handle the convolution endpoints see Main.ConvoleEndpoints.

(hint: most of these answers can be copied from 2.A and 2.B)

2.C compute f*g using zero-padding to handle the boundaries

1/5 * [4 7 8 11 10 14 12 17 15 19 17 20 17 15 10]

2.D compute f*g using reflection to handle the boundaries

There are two different answers possible, depending on whether you repeat the edge when you reflect:

1/5 * [7 9 8 11 10 14 12 17 15 19 17 20 17 19 17] (don’t repeat edge)

1/5 * [7 8 8 11 10 14 12 17 15 19 17 20 17 18 17] (repeat edge)

2.E compute f*g using kernel renormalization to handle the boundaries

[4/3 7/4 8/5 11/5 2 14/5 12/5 17/5 3 19/5 17/5 4 17/5 15/4 10/3]

3. Continuous reconstruction

Consider reconstructing the signal from the following samples (the first sample was at t=0):

f = [ 1 2 1 3 1 2 2 ]

Compute the value of the reconstructed signal at t=1.5, t=2, andt=3.25 with the following reconstruction filters (your answer foreach part should be 3 numbers).

3.A The unit box (g(t) = 1 if -.5 <= t < .5, 0 otherwise)

Note: in the book (p. 89), this is the continuous case and r=1/2

Note: in the original assignment, I had the equals on the other side

there's a reason I did it this way, but being consistent with

the book is a better idea

t=1.5: 2 (if you got 1 for this, you probably didn’t flip your box filter)

t=2: 1

t=3.25: 3

3.B The unit tent (g(t) = (1+t) if -1 < t <= 0, 1-t if 0 < t <= 1, and 0 otherwise)

You can also write this as: g(t) = 1-|t| |t|<=1

Note that since when |t|=1, g(t)=0 whether you have < or <=

In the book (p. 89), this is the tent filter of r=1 (I said 1/2 in the original)

t=1.5: 1.5

t=2: 1

t=3.25: 2.5

3.C The box of r=1 (see the book)

t=1.5: 1.5

t=2: 1.5

t=3.25: 2

3.D The tent of r=2 (again see the book)

Note: the assignment originally had r=1, but that was before I realized

that the width notation was different from box and tent

If you did the assignment before seeing this, we won't penalize you.

t=1.5: 1.625

t=2: 1.75

t=3.25: 1.9375

4. Sampling kernels

Consider resampling the following signal:

[ 0 0 4 4 0 0 4 0 4 0 4 0 0 0 4 0 0 0 ]

using the pre-filtering kernel 1/4 [1 2 1]

4.A If you resample the signal at half the sampling rate, what

result would you get?

0 3 1 2 2 2 0 2 0

4.B If you made a small change in how you sampled in 4.A (say,

chose even instead of odd values), would the results be very

much different? What does this say about the adequacy of the

kernel for doing this resampling?

1 3 1 2 2 1 1 1 0

a few values vary, which indicates that there are still some high frequencies in the signal, indicating that our kernel is probably not adequate.

4.C If you resample the signal at 1/3rd the samping rate (pick

every third sample), what result would you get?

0 3 2 2 0 1

4.D If you made a small change in how you sampled in 4.C (say,

chose elements 0,3,6 instead of elements 1,4,7), could the results be very

much different? What does this say about the adequacy of the

kernel for doing this resampling?

1 1 2 2 1 0

Similar explanation as part B - although its even more inadequate

5. Seperable Kernels

A seperable kernel is one where you can achieve a 2D convolution by doing two seperate convolutions, one in each dimension. (or in higher dimensions, an nD convolution by doing n seperate convolutions).

What 2D convolution is achieved by doing the following 1D convolutions in one dimension then the other? Your answer should be a 2D ``matrix'' of numbers (the 2D convolution kernel). 5.A 1/5 [ 1 1 1 1 1 ]

[1 1 1 1 1]

[1 1 1 1 1]

[1 1 1 1 1] * 1/25

[1 1 1 1 1]

[1 1 1 1 1]

5.B 1/16 [ 1 4 6 4 1 ]

[1 4 6 4 1]

[4 16 24 16 4]

[6 24 36 24 6] * 1/256

[4 16 24 16 4]

[1 4 6 4 1]

5.C 1/2 [1 -1 2 -1 1]

[ 1 -1 2 -1 1]

[ -1 1 -2 1 -1]

[ 2 -2 4 -2 2] * 1/4

[ -1 1 -2 1 -1]

[ 1 -1 2 -1 1]

Consider doing an NxN convolution that is seperable on an MxM image. Assume that M >> N. What is the assymptotic complexity (big O) of: 5.D Doing the convolution as 2 1D convolutions (of kernel size N)?

O(M^2 * N)

Each of M^2 pixels need to do ~2N multiplications (ignoring the boundary cases)

5.E Doing the convolution as 1 2D convolution (kernel size of NxN)?

O((M*N)^2)

Each of M^2 pixels need to do ~N^2 multiplications (ignoring the boundary cases)

Your answer should be a function of M and N.

(hint 1: ignore the boundary conditions (since we're talking about

assymptotic complexity) - just assume that the kernel always

overlaps the image - a few edge cases won't matter when M >> N)

(if hint 1 doesn't make sense, think about it this way: in the limit,

how you handle a few edge pixels won't make a difference)

(hint 2: you just need to count the multiplies)